
While large language models (LLMs) have mastered text (and, to some extent, other modalities), they lack the physical "common sense" needed to operate in dynamic, real-world environments. This has limited the deployment of AI in areas such as manufacturing and logistics, where understanding cause and effect is critical.

Meta's latest model, V-JEPA 2, takes a step toward closing this gap by learning a world model from video and physical interactions.

V-JEPA 2 can help create AI applications that need to predict outcomes and plan actions in unpredictable environments with many edge cases. This approach could lead to more capable robots in physical environments and a clear path toward advanced automation.

How a 'world model' learns to plan

Humans develop physical intuition early in life by observing their surroundings. If you see a ball thrown, you instinctively know its trajectory and can predict where it will land. V-JEPA 2 learns a similar "world model," which is an AI system's internal simulation of how the physical world operates.

The model is built around three core capabilities that enterprise applications require: understanding what is happening in a scene, predicting how the scene will change in response to an action, and planning a sequence of actions to achieve a specific goal. As Meta states in its blog, its "long-term vision is that world models will enable AI agents to plan and reason in the physical world."

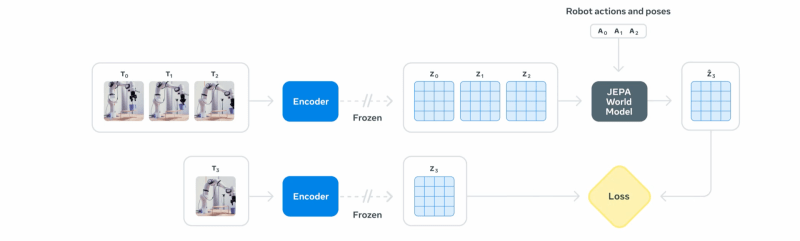

The model's architecture, called the Video Joint Embedding Predictive Architecture (V-JEPA), consists of two key parts. An "encoder" watches a video clip and condenses it into a compact numerical summary, known as an embedding. This embedding captures the essential information about the objects in the scene and their relationships. A second component, the "predictor," then takes this summary and imagines how the scene will evolve, producing a prediction of what the next summary will look like.
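To make the encoder/predictor split concrete, here is a toy sketch. It is not Meta's implementation: the real encoder and predictor are large learned networks, whereas here both are fixed, hand-picked linear maps, and the names `encode`, `predict_next`, `PROJ` and `DYNAMICS`, as well as the 4-pixel frames, are hypothetical. What it shows is the shape of the computation: a clip goes in, a short embedding comes out, and the predictor works only on embeddings, never on pixels.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def encode(clip: List[Vector], proj: Matrix) -> Vector:
    """Toy encoder: average the clip's frames, then project the pixel
    vector down to a short embedding (stands in for a learned network)."""
    n_frames = len(clip)
    mean_frame = [sum(frame[i] for frame in clip) / n_frames for i in range(len(clip[0]))]
    return matvec(proj, mean_frame)


def predict_next(embedding: Vector, dynamics: Matrix) -> Vector:
    """Toy predictor: guess the next clip's embedding from the current
    one. It never reconstructs pixels."""
    return matvec(dynamics, embedding)


# Hypothetical sizes: 4-pixel frames compressed into a 2-number embedding.
PROJ = [[0.5, 0.5, 0.0, 0.0],
        [0.0, 0.0, 0.5, 0.5]]
DYNAMICS = [[1.0, 0.1],
            [0.0, 1.0]]

clip = [[1.0, 1.0, 0.0, 0.0],
        [1.0, 1.0, 0.2, 0.2]]
z = encode(clip, PROJ)              # compact summary of the clip
z_next = predict_next(z, DYNAMICS)  # imagined summary of what comes next
print(z, z_next)
```

During training, the predictor's output would be compared with the encoder's embedding of the clip that actually came next, so the model is graded on summaries rather than on individual pixels.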

This architecture is the latest evolution of the JEPA framework, which was first applied to images with I-JEPA and now extends to video, demonstrating a consistent approach to building world models.

Unlike generative AI models that try to predict the exact color of every pixel in a future frame (a computationally intensive task), V-JEPA 2 operates in an abstract space. It focuses on predicting the high-level features of a scene, such as an object's position and trajectory, rather than its texture or background details. This makes it far more efficient than other, larger models, at just 1.2 billion parameters.

That translates into lower compute costs and makes it more suitable for deployment in real-world settings.
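Why predicting in an abstract space helps can be shown with a deliberately tiny experiment. The sketch below assumes a hypothetical 1-D "frame" of 16 pixels containing one bright object plus random background texture, and a toy encoder that keeps only the object's position. Both predictors know the object moves one pixel to the right; the one scored in pixel space is still penalized for texture it has no way of knowing, while the one scored in embedding space is not. This illustrates the idea only; it is not how V-JEPA 2 computes its training loss.

```python
import random
from typing import List

FRAME_SIZE = 16  # hypothetical 1-D "image" with 16 pixels


def render(position: int, rng: random.Random) -> List[float]:
    """A frame: one bright object at `position` on random, motion-irrelevant texture."""
    frame = [rng.uniform(0.0, 0.1) for _ in range(FRAME_SIZE)]
    frame[position] = 1.0
    return frame


def encode(frame: List[float]) -> int:
    """Toy encoder that keeps only the high-level feature (object position)."""
    return max(range(len(frame)), key=lambda i: frame[i])


def pixel_loss(predicted: List[float], actual: List[float]) -> float:
    """Mean squared error over every pixel."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)


def latent_loss(predicted: int, actual: int) -> float:
    """Error measured on the abstract feature only."""
    return float(abs(predicted - actual))


rng = random.Random(0)
position = 5
current, upcoming = render(position, rng), render(position + 1, rng)

# Latent-space predictor: "the object moves one pixel to the right".
latent_pred = encode(current) + 1

# Pixel-space predictor: same motion, but it must also guess the random
# texture, so the best it can do is the average texture value.
pixel_pred = [0.05] * FRAME_SIZE
pixel_pred[position + 1] = 1.0

print(latent_loss(latent_pred, encode(upcoming)))  # 0.0
print(pixel_loss(pixel_pred, upcoming))            # small but nonzero
```

Both models get the motion right; only the pixel-space one keeps paying for detail that carries no information about where the object goes next.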

Learning from observation and action

V-JEPA 2 is trained in two stages. First, it builds its foundational understanding of physics through self-supervised learning, watching more than one million hours of unlabeled internet video. By simply observing how objects move and interact, it develops a general-purpose world model without any human guidance.

In the second stage, this pre-trained model is fine-tuned on a small, specialized dataset. By processing just 62 hours of video showing a robot performing tasks, along with the corresponding control commands, V-JEPA 2 learns to connect specific actions to their physical outcomes. The result is a model that can plan and control actions in the real world.
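The second stage can be pictured as fitting an action-conditioned predictor on (current embedding, action, next embedding) triples. The sketch below is a minimal stand-in under loud assumptions: 2-number embeddings drawn at random instead of coming from a pretrained video encoder, a hidden linear `world` function in place of real robot physics, a linear predictor, and plain stochastic gradient descent on squared error. All names are hypothetical. The takeaway is only that the model learns what actions do by comparing its predictions with observed outcomes in embedding space.

```python
import random
from typing import List, Tuple

Vec = List[float]


def world(z: Vec, a: Vec) -> Vec:
    """Stand-in for real robot physics (hidden from the model): each
    action component shifts the matching embedding component by 0.5 * a."""
    return [zi + 0.5 * ai for zi, ai in zip(z, a)]


def predict(z: Vec, a: Vec, w: List[Vec]) -> Vec:
    """Action-conditioned predictor: next embedding = z + W @ a."""
    return [zi + sum(wij * aj for wij, aj in zip(row, a)) for zi, row in zip(z, w)]


def fine_tune(samples: List[Tuple[Vec, Vec, Vec]], steps: int = 2000, lr: float = 0.05) -> List[Vec]:
    """Fit W by stochastic gradient descent on squared error measured in
    embedding space; whatever produced z (the encoder) is left untouched."""
    w = [[0.0, 0.0], [0.0, 0.0]]
    for step in range(steps):
        z, a, z_next = samples[step % len(samples)]
        pred = predict(z, a, w)
        for i in range(2):
            err = pred[i] - z_next[i]               # prediction error for component i
            for j in range(2):
                w[i][j] -= lr * 2 * err * a[j]      # d(err^2)/d(w_ij) = 2 * err * a_j
    return w


rng = random.Random(42)
samples = []
for _ in range(200):
    z = [rng.uniform(-1, 1) for _ in range(2)]  # embedding of the current clip
    a = [rng.uniform(-1, 1) for _ in range(2)]  # recorded control command
    samples.append((z, a, world(z, a)))         # embedding of the clip that followed

W = fine_tune(samples)
print(W)  # should approach [[0.5, 0.0], [0.0, 0.5]]
```

Because the data here are noiseless and the predictor has the right form, it recovers the hidden effect of each action; a real model learns a far richer mapping from far noisier data.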

This two-stage training enables a critical capability for real-world automation: zero-shot robot planning. A robot powered by V-JEPA 2 can be deployed in a new environment and successfully manipulate objects it has never encountered before, without needing to be retrained for that specific setting.

This is a significant advance over previous models, which required training data from the exact robot and environment where they would operate. The model was trained on an open-source dataset and then successfully deployed on different robots in Meta's labs.

For example, to complete a task such as picking up an object, the robot is given a goal image of the desired outcome. It then uses the V-JEPA 2 predictor to internally simulate a range of possible next moves. It scores each imagined action by how close it brings the scene to the goal, executes the top-rated action, and repeats the process until the task is complete.

Using this method, the model achieved success rates between 65% and 80% on pick-and-place tasks with unfamiliar objects in new settings.
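The goal-image planning loop described above can be sketched as a greedy, sampling-based planner (simple random-shooting model-predictive control). This is an illustrative sketch, not Meta's planner, which may use a more refined optimizer. Its assumptions: the state and goal are plain 2-D vectors standing in for the encoder's embeddings of the camera view and the goal image, `toy_model` is a hypothetical predictor that here happens to match the environment exactly, and the candidate count, tolerance and step limit are arbitrary.

```python
import math
import random
from typing import Callable, List, Tuple

Vec = List[float]


def distance(a: Vec, b: Vec) -> float:
    """Score: Euclidean distance between two embeddings (lower is better)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def plan_and_act(z: Vec, goal: Vec,
                 predictor: Callable[[Vec, Vec], Vec],
                 env_step: Callable[[Vec, Vec], Vec],
                 rng: random.Random,
                 candidates: int = 128,
                 tolerance: float = 0.1,
                 max_steps: int = 50) -> Tuple[bool, int]:
    """Greedy sampling-based planner: imagine, score, act, repeat."""
    for step in range(max_steps):
        if distance(z, goal) < tolerance:
            return True, step
        # 1. Sample candidate next moves.
        options = [[rng.uniform(-1, 1) for _ in z] for _ in range(candidates)]
        # 2. Imagine each outcome with the world model and score it against the goal.
        best = min(options, key=lambda a: distance(predictor(z, a), goal))
        # 3. Execute only the top-rated action, then continue from the new state.
        z = env_step(z, best)
    return distance(z, goal) < tolerance, max_steps


def toy_model(z: Vec, a: Vec) -> Vec:
    """Hypothetical learned predictor; here it matches the environment exactly."""
    return [zi + 0.5 * ai for zi, ai in zip(z, a)]


start = [0.0, 0.0]   # stands in for the embedding of the current camera view
goal = [1.0, -0.5]   # stands in for the embedding of the goal image
reached, steps = plan_and_act(start, goal, toy_model, toy_model, random.Random(7))
print(reached, steps)
```

Executing only the best action and then re-planning from the newly observed state is what lets the loop recover from mistakes; with a real learned model, the predictor and the environment would disagree, and that re-planning matters more.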

Real-world impact of physical reasoning

This ability to plan and act in novel situations has direct implications for business operations. In logistics and manufacturing, it allows for more adaptable robots that can handle variations in products and warehouse layouts without extensive reprogramming. This could be especially useful as companies explore deploying humanoid robots in factories and on assembly lines.

The same world model could power highly realistic digital twins, allowing companies to simulate new processes or train other AIs in a physically accurate virtual environment. In industrial settings, a model could monitor video feeds of machinery and, based on its learned understanding of physics, predict safety issues and failures before they happen.

This research is a key step toward what Meta calls “advanced machine intelligence (AMI),” where AI systems “can learn about the world as humans do, plan how to execute unfamiliar tasks, and efficiently adapt to the changing world around us.”

Meta has released the model and its training code and is “hoping to build a broad community around this research, driving progress toward our ultimate goal of developing world models that can transform the way AI interacts with the physical world.”

What it means for enterprise technical decision-makers

V-JEPA 2 moves robotics closer to the software-defined model that cloud teams already recognize: pre-train once, deploy anywhere. Because the model learns general physics from public video and needs only a few dozen hours of task-specific footage, enterprises can shorten the data-collection cycles that typically slow down pilot projects. In practice, you could prototype a pick-and-place robot on an inexpensive desktop arm, then roll the same policy out to an industrial rig on the factory floor without gathering thousands of fresh samples or writing custom motion scripts.

Lower training overhead also reshapes the cost equation. At 1.2 billion parameters, V-JEPA 2 fits comfortably on a single high-end GPU, and because it predicts abstract features rather than pixels, inference is lighter still. This lets teams run closed-loop control on-premises or at the edge, avoiding cloud latency and the headaches of streaming video outside the plant. Budget that once went into large compute clusters can instead fund additional sensors, redundancy, or faster iteration cycles.
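The single-GPU claim is easy to sanity-check with back-of-the-envelope arithmetic. The sketch below counts memory for the 1.2 billion weights alone at a few common numeric precisions; which precision a given deployment uses is an assumption, and activations, video buffers and framework overhead (plus optimizer state, if you fine-tune) come on top.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


PARAMS = 1.2e9  # V-JEPA 2 parameter count reported in the article

for precision, nbytes in [("fp32", 4), ("bf16", 2), ("int8", 1)]:
    print(f"{precision}: {weight_memory_gb(PARAMS, nbytes):.1f} GB")
# fp32: 4.8 GB
# bf16: 2.4 GB
# int8: 1.2 GB
```

Even at full 32-bit precision the weights need under 5 GB, a small fraction of the tens of gigabytes of memory on current high-end GPUs.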
