Nvidia is rolling out its AI chips to data centers and what it calls AI factories around the world, and the company announced today that its Blackwell chips are leading the AI benchmarks.

Nvidia and its partners are speeding up the training and deployment of next-generation AI applications that use the latest advances in training and inference.

The Nvidia Blackwell architecture is designed to meet the heightened performance requirements of these new applications. In the latest round of MLPerf Training, the 12th since the benchmark's introduction, the Nvidia AI platform delivered the highest performance at scale on every benchmark and powered every result submitted on the benchmark's toughest large language model (LLM)-focused test: Llama 3.1 405B pretraining.

The Nvidia platform was the only one that submitted results on every MLPerf Training v5.0 benchmark, underscoring its performance and versatility across a wide array of AI workloads: LLMs, recommendation systems, multimodal LLMs, object detection and graph neural networks.

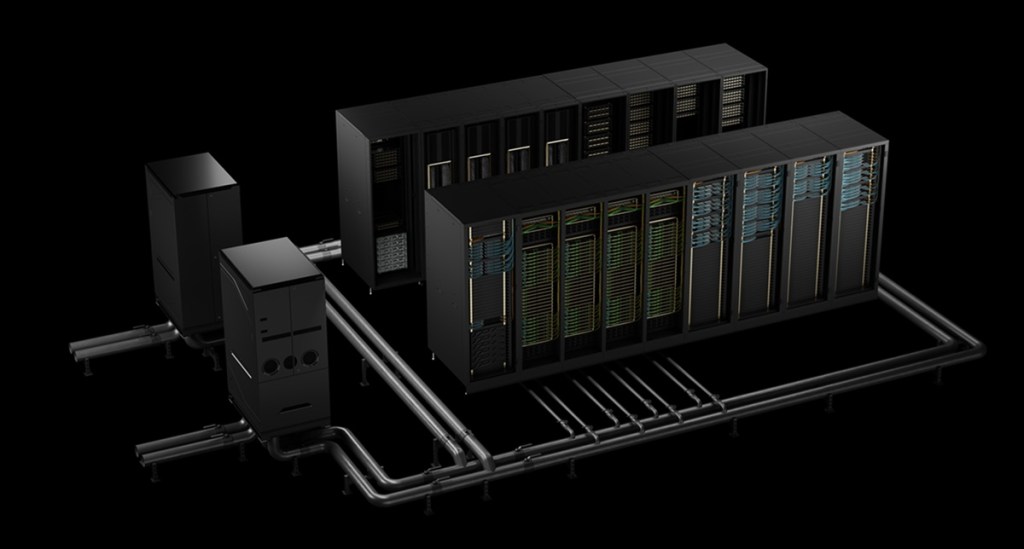

The at-scale submissions used two AI supercomputers powered by the Nvidia Blackwell platform: Tyche, built using Nvidia GB200 NVL72 rack-scale systems, and Nyx, based on Nvidia DGX B200 systems. In addition, Nvidia collaborated with CoreWeave and IBM to submit GB200 NVL72 results using a total of 2,496 Blackwell GPUs and 1,248 Nvidia Grace CPUs.

On the new Llama 3.1 405B pretraining benchmark, Blackwell delivered 2.2 times more performance than the previous-generation architecture at the same scale.

On the Llama 2 70B LoRA fine-tuning benchmark, Nvidia DGX B200 systems, powered by eight Blackwell GPUs, delivered 2.5 times more performance than a submission using the same number of GPUs in the prior round.
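The 2.2 times and 2.5 times figures above are comparisons between two runs. As a minimal sketch, assuming performance is taken as the inverse of time to train at the same GPU count, the ratio can be computed as follows. The function name `speedup` and the times used are hypothetical and chosen only to show the arithmetic; they are not MLPerf results.

```python
def speedup(baseline_minutes: float, new_minutes: float) -> float:
    """Return how many times faster the new run is than the baseline.

    Assumes the score is a time to train, so lower is better and the
    speedup is the baseline time divided by the new time.
    """
    if baseline_minutes <= 0 or new_minutes <= 0:
        raise ValueError("training times must be positive")
    return baseline_minutes / new_minutes


# Hypothetical times, used only to illustrate the arithmetic.
# They are not taken from any MLPerf submission.
previous_gen_minutes = 55.0
blackwell_minutes = 25.0

print(f"{speedup(previous_gen_minutes, blackwell_minutes):.1f}x")  # prints "2.2x"
```

A ratio like this is only meaningful when both runs train the same model to the same accuracy target on the same number of GPUs, which is what "at the same scale" refers to.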

These performance gains highlight advances in the Blackwell architecture, including high-density liquid-cooled racks, 13.4TB of coherent memory per rack, fifth-generation Nvidia NVLink and Nvidia NVLink Switch interconnect technologies for scale-up, and Nvidia Quantum-2 InfiniBand networking for scale-out. In addition, innovations in the Nvidia NeMo Framework software stack raise the bar for next-generation multimodal LLM training, which is critical for bringing agentic AI applications to market.

These agentic AI-powered applications will one day run in AI factories. The new applications will produce tokens and valuable intelligence that can be applied to almost every industry and academic domain.

The Nvidia data center platform includes GPUs, CPUs, high-speed fabrics and networking, as well as a vast array of software such as Nvidia CUDA-X libraries, the NeMo Framework, Nvidia TensorRT-LLM and Nvidia Dynamo. This highly tuned ensemble of hardware and software technologies lets organizations train and deploy models more quickly, sharply reducing time to value.

The Nvidia partner ecosystem participated extensively in this MLPerf round. Beyond the submission with CoreWeave and IBM, other compelling submissions came from Asus, Cisco, Giga Computing, Lambda, Lenovo, Quanta Cloud Technology and Supermicro.

This round saw the first MLPerf Training submissions using GB200. The benchmark was developed by the MLCommons Association, which has more than 125 members and affiliates. Its time-to-train metric ensures that the training process produces a model that meets the required accuracy, and its standardized benchmark run rules ensure apples-to-apples performance comparisons. The results are peer-reviewed before publication.

Basics on training benchmarks

Dave Salvator is someone I knew when he was part of the tech press. He is now director of accelerated computing products in the Accelerated Computing Group at Nvidia. In a press briefing, Salvator said that Nvidia CEO Jensen Huang talks about the notion of several types of scaling laws for AI. They include pre-training, where you are basically teaching the AI model knowledge, starting from zero. It is a heavy computational lift that is the backbone of AI, Salvator said.

From there, Nvidia moves into post-training scaling. This is where models go to school, and it is where you can do things like fine-tuning, for example, where you bring a different data set to a pre-trained model that has been trained up to a point, to give it additional domain knowledge of your particular data set.

And then finally, there is test-time scaling or reasoning, sometimes called long thinking. The other term for this is agentic AI. It is AI that can actually think, reason and solve problems. Rather than a system where you ask a question and get a relatively simple answer, test-time scaling and reasoning can work on much more complex tasks and deliver rich analysis.

And then there is also generative AI, which can generate content on an as-needed basis, including text summarization and translation, but also visual content and even audio content. There are many types of scaling going on in the AI world. For the benchmarks, Nvidia focused on pre-training and post-training results.

“That's where AI begins what we call the investment phase of AI. And then when you get into inferencing and deploying those models and then basically generating those tokens, that's where you begin to get your return on your investment in AI,” he said.

The MLPerf benchmark is in its 12th round and dates back to 2018. The consortium backing it has more than 125 members, and the benchmark has been used for both inference and training tests. The industry sees the benchmarks as robust.

“As I'm sure many of you know, sometimes performance claims in the AI world can be a bit of the Wild West. MLPerf tries to bring some order to that chaos,” Salvator said. “Everyone has to do the same amount of work. Everyone is held to the same standard in terms of convergence. And once results are submitted, those results are reviewed and vetted by all the other submitters, and people can ask questions and even challenge the results.”

The most intuitive metric around training is how long it takes to train an AI model to what is called convergence. That means hitting a specified level of accuracy. It is an apples-to-apples comparison, Salvator said, and it takes into account constantly changing workloads.
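The idea of timing a run until it reaches a specified accuracy can be sketched in a few lines. This is a toy illustration under stated assumptions, not the MLPerf harness or its run rules: `time_to_train`, `ToyModel` and the 0.75 target are hypothetical names and values, and the "model" simply gains a fixed amount of accuracy per epoch.

```python
import time
from typing import Callable, Tuple


def time_to_train(
    train_one_epoch: Callable[[], None],
    evaluate: Callable[[], float],
    target_accuracy: float,
    max_epochs: int = 100,
) -> Tuple[int, float]:
    """Train until evaluate() reaches target_accuracy.

    Returns (epochs_run, elapsed_seconds). Raises RuntimeError if the
    target is not reached within max_epochs, because a run that never
    converges has no valid time to train.
    """
    start = time.perf_counter()
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()
        if evaluate() >= target_accuracy:
            return epoch, time.perf_counter() - start
    raise RuntimeError("did not converge within max_epochs")


class ToyModel:
    """Hypothetical stand-in for a real model: accuracy rises 0.25 per epoch."""

    def __init__(self) -> None:
        self.accuracy = 0.0

    def train_one_epoch(self) -> None:
        self.accuracy = min(1.0, self.accuracy + 0.25)

    def evaluate(self) -> float:
        return self.accuracy


model = ToyModel()
epochs, seconds = time_to_train(
    model.train_one_epoch, model.evaluate, target_accuracy=0.75
)
print(f"converged after {epochs} epochs in {seconds:.6f} s")
```

Because the clock stops only when the accuracy target is met, a faster system that produces a worse model gets no credit, which is the property that makes results comparable across submitters.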

This year, there is a new Llama 3.1 405B workload, replacing the GPT-3 175B workload that was previously in the benchmark. Salvator said Nvidia set several records in the benchmarks. The Nvidia GB200 NVL72 AI factories are fresh from the fabrication factories. From one generation of chips (Hopper) to the next (Blackwell), Nvidia saw a 2.5 times improvement in image generation results.

“We're still fairly early in the Blackwell product life cycle, so we fully expect to get more performance from the Blackwell architecture over time, as we continue to refine our software optimizations and as new, frankly heavier workloads come into the market,” Salvator said.

He noted that Nvidia was the only company to submit entries for all benchmarks.

“The great performance we're achieving comes through a combination of things. It's our fifth-gen NVLink and NVSwitch delivering up to 2.66 times more performance, along with other general architectural goodness in Blackwell and our ongoing software optimizations, that makes that performance possible,” Salvator said.

He added, “Because of Nvidia's heritage, we have been known for the longest time as those GPU guys. We certainly make great GPUs, but we have gone from being just a chip company to not only being a system company with things like our DGX servers, to now building entire racks with our rack designs, which are now reference designs to help our partners get to market faster, to building entire data centers, which we are now referring to as AI factories.”